This week the Online Hate Prevention Institute (OHPI), an Australian charity which I have the privilege of leading as its CEO, released my major new report into Hate Speech on Facebook. OHPI seeks to facilitate a change in online culture so that hate in all its forms becomes as socially unacceptable online as it is in “real life”. This post provides an overview of OHPI’s new report and its real impact, which extends far beyond exposing specific examples of hateful content.

The report focuses on Antisemitism on Facebook, but it does so as an example of a wider problem with Hate Speech on the social media platform. The major finding, supported by rigorous documentation, is that Facebook does not really understand antisemitism. Given that antisemitism is the most well researched form of hate speech, this has implications for Facebook’s understanding of Hate Speech more widely.

While some forms of antisemitism are recognized and removed by Facebook, complaints about other types of antisemitic content are routinely dismissed. The report is not about failures by front line staff; the problem goes far higher. A draft of the new report was given to Facebook over a month before the final release, and even then Facebook failed to take action against the remaining items. Facebook, it seems, is reluctant to learn from experts and to recognize additional manifestations of antisemitism.

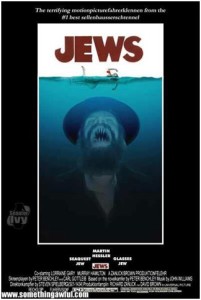

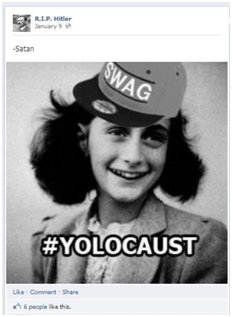

The specific expressions of antisemitism which Facebook refused to recognize include: memes implying Jewish control of governments and the media; memes based on actual Nazi propaganda; memes portraying Jews as demons or monsters; promotion of the Protocols of the Elders of Zion; Holocaust inversion accusing Israel of Nazism; and calls for genocide and the destruction of Israel. The report is not about isolated incidents, but rather about a consistent pattern of behaviour. For example, the report documents not one but four pages promoting the Protocols of the Elders of Zion. Reports about all four have been rejected. Facebook just doesn’t get it, even after its attention has been drawn to the problem.

The release of this important report was timed to coincide with the International Day for the Elimination of Racial Discrimination. The report argues that to counter racism as a society, we must begin at the level of the individual. We must each take responsibility for our own actions to ensure we don’t ourselves spread racist ideas, but moreover, it is imperative we take action when we encounter racism – whether as a victim or a bystander. The report praises Facebook for providing the tools that empower users and allow them to report the Hate Speech they encounter. At the same time, we highlight that such tools amount to nothing if Facebook then simply rejects the complaints.

The report makes sixteen recommendations. The first six relate to identifying certain kinds of antisemitism. Recommendation one, for example, states: “OHPI calls on Facebook to recognise the symbolism of Anne Frank in relation to the Holocaust and to commit to educating all review staff to recognise her picture and to remove memes that make use of it”; and recommendation three states: “OHPI calls on Facebook to recognise calls for Israel’s destruction as a form of hate speech and to remove them from the platform.”

The remaining recommendations relate to general improvements that would help prevent all forms of Hate Speech on Facebook. Recommendation eight, for example, suggests six factors that can be used to determine how well an online platform is responding to hate speech.

The listed factors are:

- How easily users can report content

- How quickly the platform responds to user reports

- How accurately the platform responds to reports

- How well the platform recognises and learns from mistakes in its initial response

- How well the platform mitigates against repeat offenders (and specifically including those operating across multiple accounts)

- How well the platform mitigates against repeated posting of the same hate speech by different people

The impact of the report goes far beyond the documented problem of antisemitism. In OHPI’s home state of Victoria (Australia), the State Government’s Human Rights Commission welcomed the report, with the Chairman particularly welcoming “the Institute’s advocacy to the site owners to ensure the resource is not abused by those who would exploit it to vent foul insults and inflame communal discord.” In endorsing the report, the CEO of the peak Jewish community body in Australia commented that “the internet and social media have sometimes provided a megaphone to racist individuals and groups”, and noted that this allows messages that were once relegated to the edges of society to be given undue prominence.

The report reiterates the concern expressed when the term “antisemitism 2.0” was first coined in 2008. The significance of antisemitism 2.0 rests in the combination of a viral idea, such as hate speech, and the technology designed to take ideas viral. As the provider of the technology, there is a clear moral obligation on Facebook to ensure that power is used responsibly. The obligation applies as much to the prevention of the abuse of the platform to promote hate speech as it does to the prevention of abuses of privacy, identity theft, and cyber-bullying. Facebook has a moral obligation to ensure the service it provides does not become a tool for the spread of harm within society.

Internationally, the report was welcomed by experts from the USA, Canada, the UK, the Netherlands, Israel, Italy, and Argentina. Dr Charles Small called the report “of great international importance” and said it “identifies an emerging phenomenon which poses danger and challenges for the international human rights policy community”; David Matas said the report “demonstrates in spades that governance structures are badly lacking in Facebook when it comes to hate speech”; Dr David Hirsh described the report as a “well researched and clearly written contribution to debates about online hate speech”; and Ronald Eissens said it “shows what’s really happening on the ground and the inconsistency of FB’s policies when it comes to hate speech”. Fiamma Nirenstein, former Chairperson of the Italian Parliamentary Inquiry into Antisemitism, called the report “extremely valuable and innovative work” and encouraged law makers around the world to take note of it.

The impact of the report is not in the shocking images it presents, nor in the evidence of pages dedicated to racial vilification and the proliferation of racial hatred. A social media platform will always attract such content. The impact is in the revelations, not about Facebook users, but about Facebook itself. The report shines a light on the difficulties Facebook is having in recognizing violations of its terms of use. The terms of service already prohibit hate speech, but Facebook seems reluctant to engage with and learn from experts so it can better implement this important policy.

Significantly, the report highlights that the challenge of hate speech is an ongoing one; the Protocols are a prime example of this, with their introductory contents morphing and changing with each new edition. The report calls on social media companies like Facebook to implement systems for continual learning and improvement. User complaints will be wrongly rejected as new forms of hate are reported for the first time. Entry level staff systematically applying existing knowledge will inevitably come up short. The challenge is what happens next. How will Facebook respond when sunlight falls on problematic content and mistaken reviews by front line staff? How will Facebook take the knowledge of experts and apply it as disinfectant to the platform? Is there any value in sunlight as a disinfectant if action doesn’t follow exposure and public complaints are ignored?

The removal of hate speech by Facebook is not an infringement of free speech, but rather a statement of the company’s values and those of its global community of users. Facebook’s ability to effectively respond to hate speech, however, begins with its ability to identify hate speech in all its forms, if not initially, at least upon reflection. I hope the new report aids Facebook as it faces an increasingly global and complex future.

Dr Andre Oboler is CEO of the Online Hate Prevention Institute and co-chair of the Online Antisemitism Working Group of the Global Forum to Combat Antisemitism. He holds a PhD in computer science from Lancaster University (UK) and is currently completing a JD at Monash University (Australia).

Originally published as: Andre Oboler, “If you can’t Recognize Hate Speech, the sunlight can’t penetrate”, The Louis D. Brandeis Center Blog, March 22, 2013